In this time series project, you will forecast Walmart sales over time using the powerful, fast, and flexible time series forecasting library Greykite that helps automate time series problems.

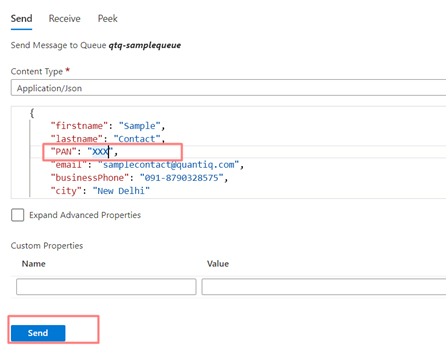

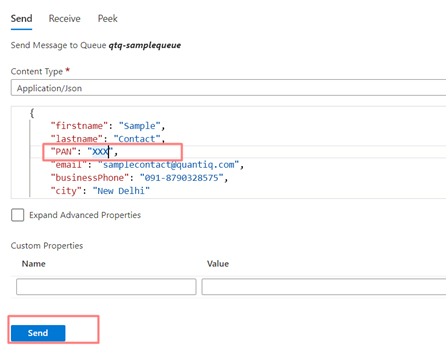

Create a spreadsheet of scenarios of input data and expected results and validate these with the business customer.

These modules can be installed by running the following commands in the Command Prompt:

The following files should be created in your project directory in order to set up ETL using Python:

This file is required for setting up all source and target database connection strings in the process of setting up ETL using Python.

It integrates with your preferred parser to provide idiomatic methods of navigating, searching, and modifying the parse tree.

It also comes with a web dashboard that allows users to track all ETL jobs. We need tests to uncover such integrity constraint violations. There are two possibilities: an entity might be present or absent as per the data model design. For each category below, we first verify whether the metadata defined for the target system meets the business requirement and, second, whether the tables and field definitions were created accurately. Read along to find in-depth information about setting up ETL using Python. As testers on ETL or data migration projects, we add tremendous value if we uncover data quality issues that might otherwise propagate to the target systems and disrupt entire business processes. In this example, some of the data is stored in CSV files, while the rest is in JSON files.
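As a rough illustration of extracting from mixed CSV and JSON sources, the sketch below reads every `.csv` and `.json` file in a folder into one list of records. The function names and the assumption that each JSON file holds a list of objects are hypothetical and not taken from the original example.

```python
import csv
import json
from pathlib import Path


def extract_csv(path):
    """Read a CSV file into a list of dicts keyed by the header row."""
    with open(path, newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))


def extract_json(path):
    """Read a JSON file that is assumed to hold a list of records."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def extract_all(folder):
    """Collect records from every .csv and .json file in a folder."""
    records = []
    for path in sorted(Path(folder).iterdir()):
        if path.suffix == ".csv":
            records.extend(extract_csv(path))
        elif path.suffix == ".json":
            records.extend(extract_json(path))
    return records
```

Files are visited in sorted name order, so the combined record list is reproducible between runs.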

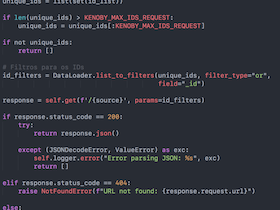

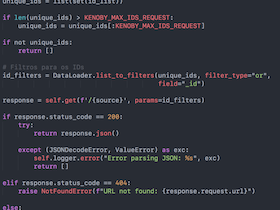

Hevo also allows integrating data from non-native sources using Hevo's in-built REST API & Webhooks Connector. There are three groupings for this: In metadata validation, we validate that the table and column data type definitions for the target are correctly designed and, once designed, are executed as per the data model design specifications. A few of the metadata checks are given below: (ii) Delta change: These tests uncover defects that arise when the source systems' metadata changes midway through the project and the changes are not implemented in the target systems. Hence, it is considered suitable only for simple ETL operations in Python that do not require complex transformations or analysis. Manually programming and setting up each of the processes involved in ETL using Python would require immense engineering bandwidth. Another test could be to confirm that the date formats match between the source and target systems. Creating an ETL pipeline for such data from scratch is a complex process, since businesses have to devote a large amount of resources to creating the pipeline and then ensuring that it keeps up with high data volumes and schema variations.
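A metadata check of this kind can be sketched as a comparison of two schema descriptions. In the sketch below, each schema is a plain dict mapping a column name to a `(data_type, max_length)` pair; that representation, the function name, and the sample target length of 500 are assumptions made for illustration.

```python
def compare_metadata(source_schema, target_schema):
    """Return a list of mismatches between a source and a target schema.

    Each schema maps column name -> (data_type, max_length).
    """
    issues = []
    for column, (src_type, src_len) in source_schema.items():
        if column not in target_schema:
            issues.append(f"{column}: missing in target")
            continue
        tgt_type, tgt_len = target_schema[column]
        if src_type != tgt_type:
            issues.append(f"{column}: type {src_type} != {tgt_type}")
        # A shorter target column risks truncating source values.
        if src_len is not None and tgt_len is not None and tgt_len < src_len:
            issues.append(
                f"{column}: target length {tgt_len} < source length {src_len}"
            )
    return issues


# Hypothetical schemas for illustration only.
source = {"student_id": ("int", None), "address": ("varchar", 2000)}
target = {"student_id": ("int", None), "address": ("varchar", 500)}
print(compare_metadata(source, target))
```

Running the same comparison again after every change to the source metadata is one way to catch the delta-change defects described above.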

It was created to fill C++ and Java gaps discovered while working with Google's servers and distributed systems.

Termination date should be null if Employee Active status is True/Deceased. Even though it is not an ETL tool itself, it can be used to set up ETL using Python. The log indicates that you have started and ended the Extract phase. A logging entry needs to be established before loading. Have tests to validate this. Also, it does not perform any transformations.
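The termination date rule can be expressed as a small row-level test. The sketch below assumes each employee record is a dict with hypothetical `employee_id`, `active_status`, and `termination_date` keys, and that the status is stored as a string.

```python
def termination_date_violations(employees):
    """Return rows whose termination date is set although the rule says null."""
    must_be_null = {"True", "Deceased"}
    return [
        row
        for row in employees
        if row["active_status"] in must_be_null
        and row["termination_date"] is not None
    ]


# Hypothetical sample data for illustration only.
employees = [
    {"employee_id": 1, "active_status": "True", "termination_date": None},
    {"employee_id": 2, "active_status": "Deceased", "termination_date": "2020-03-01"},
    {"employee_id": 3, "active_status": "False", "termination_date": "2019-07-15"},
]
for row in termination_date_violations(employees):
    print(f"Employee {row['employee_id']}: unexpected termination date")
```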

It can extract data from a variety of sources in formats such as CSV, JSON, XML, XLS, SQL, and others. This means that all their data is stored across the databases of various platforms that they use.


Now document the corresponding values for each of these rows that are expected to match in the target tables. However, several libraries are currently in development, including Nokogiri, Kiba, and Square's ETL package. It also accepts data from sources other than Python, such as CSV/JSON/HDF5 files, SQL databases, data from remote machines, and the Hadoop File System.


Aggregate functions are built into the functionality of the database. It is especially simple to use if you have prior experience with Python. Why Validate Data For Data Migration Projects? We have two types of tests possible here: Note: It is best to highlight (color code) matching data entities in the Data Mapping sheet for quick reference.

`validation = df`


A simple data validation test is to verify that all 200 million rows of data are available in the target system. When using Pygrametl, the developer codes the ETL process in Python. Here, we mainly validate integrity constraints such as foreign key, primary key reference, unique, default, etc. Python to Microsoft SQL Server Connector. `validation['chk'] = validation['Invoice ID'].apply(lambda x: True if x in df else False)` They can maintain multiple versions with color highlights to form inputs for any of the tests above. In this article, you have learned about setting up ETL using Python. In order to perform a proper analysis, the first step is to create a Single Source of Truth for all their data.
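One way to sketch the row-count and key-presence checks is to compare sets of key values from the source and the target. The function name and the sample rows below are hypothetical; the `Invoice ID` column is borrowed from the snippet above. Note that in pandas, `x in df` tests column labels rather than cell values, so this sketch compares the key values explicitly.

```python
def reconcile(source_rows, target_rows, key):
    """Compare row counts and key coverage between source and target."""
    src_keys = {row[key] for row in source_rows}
    tgt_keys = {row[key] for row in target_rows}
    return {
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        "missing_in_target": sorted(src_keys - tgt_keys),
        "unexpected_in_target": sorted(tgt_keys - src_keys),
    }


# Hypothetical sample data for illustration only.
source_rows = [{"Invoice ID": "A-1"}, {"Invoice ID": "A-2"}, {"Invoice ID": "A-3"}]
target_rows = [{"Invoice ID": "A-1"}, {"Invoice ID": "A-3"}]
print(reconcile(source_rows, target_rows, "Invoice ID"))
```

For very large tables, the same idea is usually pushed down to the database as count and anti-join queries rather than loading every key into memory.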

Apache Airflow is a Python-based open-source workflow management and automation tool that was developed by Airbnb. Pass the file name as the argument, as below: `filename = 'C:\\Users\\nfinity\\Downloads\\Data sets\\supermarket_sales.csv'`. Pygrametl is a Python framework for creating Extract-Transform-Load (ETL) processes. Example: The address of a student in the Student table was 2000 characters in the source system. Another test is to verify that TotalDollarSpend is calculated correctly, with no defects in rounding the values or maximum value overflows.
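A check on an aggregate such as TotalDollarSpend might look like the sketch below, which recomputes the total from source amounts with `decimal.Decimal` to avoid binary floating-point rounding surprises. The function names, the half-up rounding to two decimal places, and the `max_value` limit are all assumptions; the real rounding rule and column precision come from the target data model.

```python
from decimal import Decimal, ROUND_HALF_UP


def total_dollar_spend(amounts):
    """Sum amounts given as strings and round the total to two decimals."""
    total = sum((Decimal(a) for a in amounts), Decimal("0"))
    return total.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def check_total(source_amounts, target_total, max_value=Decimal("99999999.99")):
    """Return True if the target total matches and fits the column limit."""
    expected = total_dollar_spend(source_amounts)
    return expected == Decimal(target_total) and expected <= max_value


# Hypothetical sample data for illustration only.
print(check_total(["10.10", "0.205"], "10.31"))
```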


`renamed_data['buy_date'].head()`

Here we validate the data by checking for missing values. The code loops over the columns with `for col in df.columns:` and checks whether each column has any missing value, printing a message of the form "{} has NO missing value!" when it does not. Also take business logic into consideration to weed out such data.

More information on Apache Airflow can be found here. In this type of test, identify columns that should have unique values as per the data model. In most production environments, data validation is a key step in data pipelines. Businesses can instead use automated platforms like Hevo. It is considered to be one of the most sophisticated tools, housing various powerful features for creating complex ETL data pipelines. The Data Mapping table will give you clarity on which tables have these constraints.

April 5th, 2021

Java serves as the foundation for several other big data tools, including Hadoop and Spark. Validate whether there are encoded values in the source system and verify that the data is correctly populated in the target system after the ETL or data migration job. This file should contain all the code that helps establish connections among the correct databases and run the required queries in order to set up ETL using Python.

Data validation is a form of ... The code below can be run in a Jupyter notebook or any Python console. Step 5: Check Data Type, convert as Date column. Step 6: Validate data to check missing values. Start with documenting all the tables and their entities in the source system in a spreadsheet.
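The missing-value check from Step 6 can be sketched without pandas by counting empty cells per column over a list of dict rows, for example rows produced by `csv.DictReader`. The function name, the choice of `""` and `None` as missing markers, and the sample rows are assumptions; with a pandas DataFrame the equivalent per-column test would be `df[col].isna().any()`.

```python
def missing_value_report(rows, missing_markers=("", None)):
    """Count missing values per column across a list of dict rows."""
    counts = {}
    for row in rows:
        for column, value in row.items():
            counts.setdefault(column, 0)
            if value in missing_markers:
                counts[column] += 1
    return counts


# Hypothetical sample data for illustration only.
rows = [
    {"Invoice ID": "A-1", "buy_date": "2019-01-05"},
    {"Invoice ID": "A-2", "buy_date": ""},
]
for column, count in missing_value_report(rows).items():
    if count:
        print(f"{column} has {count} missing value(s)")
    else:
        print(f"{column} has NO missing value!")
```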

Another possibility is the absence of data. For example, the CustomerType field in the Customers table has data only in the source system and not in the target system. With the source and destination selected, Hevo can get you started quickly with data ingestion and replication in just a few minutes.
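The absence-of-data case can be tested by listing the fields that hold values somewhere in the source but nowhere in the target. The sketch below is one possible approach; the function names and the sample Customers rows are hypothetical.

```python
def populated_columns(rows):
    """Return the set of columns that hold at least one non-empty value."""
    return {
        column
        for row in rows
        for column, value in row.items()
        if value not in ("", None)
    }


def columns_lost_in_target(source_rows, target_rows):
    """List columns populated in the source but empty or absent in the target."""
    return sorted(populated_columns(source_rows) - populated_columns(target_rows))


# Hypothetical sample data for illustration only.
source_customers = [{"CustomerID": "1", "CustomerType": "Retail"}]
target_customers = [{"CustomerID": "1", "CustomerType": ""}]
print(columns_lost_in_target(source_customers, target_customers))
```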
