Under Browse, look for Active Directory and click it. For information on creating a tenant ID in Azure, see "Quickstart: Create a new tenant in Azure Active Directory."

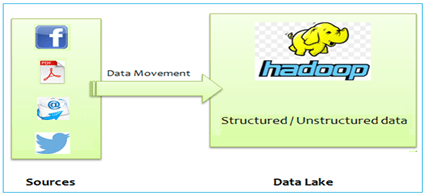

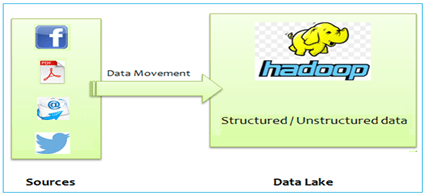

To reduce data transfer over the network, Hadoop moves data processing to the storage layer, where the data is physically stored.
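
To make the idea of moving processing to the data concrete, here is a minimal Python sketch of locality-aware task placement. It is not Hadoop's actual scheduler (YARN and MapReduce handle locality with more nuance, including rack awareness); it assumes a hypothetical map of block replicas to nodes plus a count of free task slots per node, and it prefers a node that already holds the block before falling back to a remote one.

```python
from typing import Dict, Set


def place_tasks(block_locations: Dict[str, Set[str]],
                free_slots: Dict[str, int]) -> Dict[str, str]:
    """Assign one task per block, preferring a node that already stores the block.

    block_locations: block id -> nodes holding a replica (hypothetical input)
    free_slots: node -> number of task slots still available
    Returns a mapping of block id -> node chosen to run that block's task.
    """
    slots = dict(free_slots)  # work on a copy so the caller's dict is untouched
    assignment: Dict[str, str] = {}
    for block, replicas in sorted(block_locations.items()):
        # Data-local choice: a node that holds a replica and still has a free slot.
        local = [n for n in sorted(replicas) if slots.get(n, 0) > 0]
        if local:
            node = local[0]
        else:
            # Fallback: any node with a free slot; the block then crosses the network.
            remote = [n for n in sorted(slots) if slots[n] > 0]
            if not remote:
                raise RuntimeError(f"no free slot for block {block}")
            node = remote[0]
        slots[node] -= 1
        assignment[block] = node
    return assignment


if __name__ == "__main__":
    blocks = {"b1": {"node1", "node2"}, "b2": {"node2", "node3"}, "b3": {"node1"}}
    slots = {"node1": 1, "node2": 1, "node3": 1}
    print(place_tasks(blocks, slots))  # {'b1': 'node1', 'b2': 'node2', 'b3': 'node3'}
```

With the sample input, b1 and b2 run next to their data, while b3 has to be read over the network because node1, its only replica holder, is already busy.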

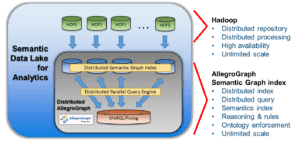

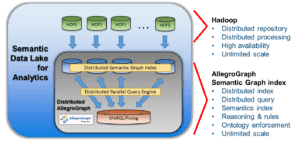

Edge nodes are used mainly for data landing and as the cluster's point of contact with the outside world.

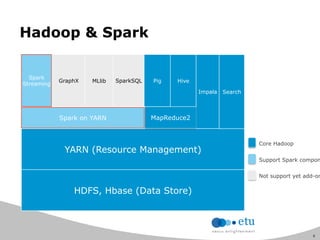

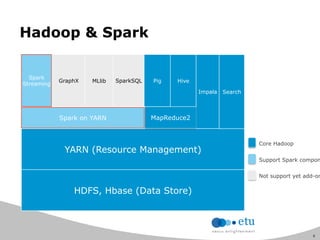

Requirements: list the requirements for new environments in OCI.

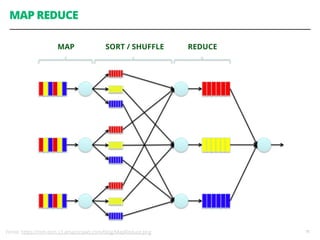

Hadoop is built around the Hadoop Distributed File System (HDFS), which distributes data across the cluster's nodes for both storage and processing.

Hadoop stores files in HDFS (Hadoop Distributed File System), but it does not qualify as a relational database. Relational databases store data in tables defined by a precise schema. Hadoop can store unstructured, semi-structured, and structured data, whereas traditional databases store only structured data.
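
The contrast between a fixed schema and storing data as-is is often described as schema-on-write versus schema-on-read. The Python sketch below illustrates schema-on-read under simple assumptions: raw CSV text is kept unchanged, and a hypothetical schema (column names and conversion functions) is applied only when the data is read, so rows that don't fit are skipped at query time rather than rejected at load time.

```python
import csv
import io
from typing import Callable, Dict, Iterator, List, Tuple

# Raw data as it might sit in the lake: stored as-is, nothing was validated on write.
RAW = "2023-01-05,alice,42\n2023-01-06,bob,not-a-number\n2023-01-07,carol,17\n"

# A hypothetical schema: (column name, conversion function) pairs, applied only on read.
Schema = List[Tuple[str, Callable[[str], object]]]
ORDERS_SCHEMA: Schema = [("date", str), ("customer", str), ("quantity", int)]


def read_with_schema(raw: str, schema: Schema) -> Iterator[Dict[str, object]]:
    """Parse raw CSV text, yielding rows that fit the schema and skipping those that don't."""
    for fields in csv.reader(io.StringIO(raw)):
        if len(fields) != len(schema):
            continue  # wrong number of columns for this schema
        try:
            yield {name: cast(value) for (name, cast), value in zip(schema, fields)}
        except ValueError:
            continue  # a value could not be converted, e.g. "not-a-number" to int


if __name__ == "__main__":
    for row in read_with_schema(RAW, ORDERS_SCHEMA):
        print(row)
```

Because the raw text is untouched, a different schema can later be applied to the same data without reloading it.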

Another assumption about big data that has the potential for catastrophe is that data scientists must work in Hadoop, the ubiquitous data processing framework. Qlik Replicate can also feed Kafka and Hadoop flows for real-time big data streaming. You simply add more nodes (or clusters) as you need more space. It is a good environment for practicing with the Hadoop ecosystem components and Spark.

HDFS is also schema-less, which means it can store files of any type and format; this is usually the kind of data one wants to bring in when preparing a data lake on Hadoop. Apache Hadoop clusters also place computing resources close to storage, which speeds up processing of large stored data sets. The implementation is part of the open-source project chombo. Include the hadoop-aws JAR in the classpath. To grant permissions to the app, click Permissions for the app, and then add the Azure Data Lake and Windows Azure Service Management API permissions.
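
Once the app has an Application ID, a key, and the required permissions, Hadoop's ADLS Gen1 connector (hadoop-azure-datalake) can authenticate as that service principal. The sketch below assumes the client-credential (OAuth 2.0) settings documented for that connector and renders them in the core-site.xml layout; the tenant ID, Application ID, and secret are placeholders. In practice you may prefer Hadoop's credential provider mechanism over storing the secret in plain text.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def adl_client_credential_props(tenant_id: str, application_id: str,
                                client_secret: str) -> Dict[str, str]:
    """Hadoop settings for ADLS Gen1 service-principal (client credential) authentication."""
    return {
        "fs.adl.oauth2.access.token.provider.type": "ClientCredential",
        # OAuth 2.0 token endpoint of the Azure AD tenant
        "fs.adl.oauth2.refresh.url": f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
        "fs.adl.oauth2.client.id": application_id,  # the app's Application ID
        "fs.adl.oauth2.credential": client_secret,  # the key created for the app
    }


def to_core_site_xml(props: Dict[str, str]) -> str:
    """Render properties in Hadoop's <configuration><property>...</property> layout."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(to_core_site_xml(adl_client_credential_props(
        "TENANT_ID", "APPLICATION_ID", "CLIENT_SECRET")))
```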

Some data lake architecture providers use a Hadoop-based data management platform consisting of one or more Hadoop clusters.

The phrase "data lake" has become popular for describing the movement of data into Hadoop to create a repository for large quantities of structured and unstructured data in native formats.

If Hadoop-based data lakes are to succeed, you'll need to ingest and retain raw data in a landing zone with enough metadata tagging to know what it is and where it's from. Once the app is created, note down its Application ID. Implementing data lakes in OCI with Big Data Service is a multi-step workflow.
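
A minimal sketch of a landing zone with metadata tagging, assuming a local directory stands in for the lake's storage (on a real cluster this would be an HDFS or object-store path). The layout `<source>/<date>/<name>` and the sidecar `.meta.json` fields are hypothetical choices: the payload is written byte-for-byte, and the sidecar records its source, ingestion time, size, and SHA-256 checksum so you can later tell what the file is and where it came from.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def land_raw_file(landing_root: Path, source: str, name: str, payload: bytes) -> Path:
    """Write payload unchanged into a landing zone and tag it with provenance metadata.

    Hypothetical layout: <landing_root>/<source>/<YYYY-MM-DD>/<name>,
    plus a sidecar file <name>.meta.json next to it.
    """
    now = datetime.now(timezone.utc)
    target_dir = landing_root / source / now.strftime("%Y-%m-%d")
    target_dir.mkdir(parents=True, exist_ok=True)

    data_path = target_dir / name
    data_path.write_bytes(payload)  # raw data is stored exactly as received

    metadata = {
        "source": source,                # where it's from
        "original_name": name,           # what it is
        "ingested_at": now.isoformat(),  # when it arrived (UTC)
        "size_bytes": len(payload),
        "sha256": hashlib.sha256(payload).hexdigest(),  # helps spot corruption or duplicates
    }
    (target_dir / f"{name}.meta.json").write_text(json.dumps(metadata, indent=2))
    return data_path


if __name__ == "__main__":
    root = Path(tempfile.mkdtemp())
    landed = land_raw_file(root, "crm", "customers.csv", b"id,name\n1,alice\n")
    print(landed.relative_to(root))
    print(landed.with_name(landed.name + ".meta.json").read_text())
```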

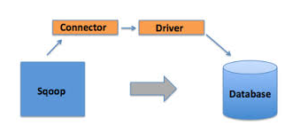

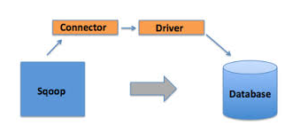

Upload using services:

- Azure Data Factory: a fully managed service for composing data storage, processing, and movement services into streamlined, adaptable, and reliable data production pipelines.
- Apache Sqoop: a tool designed to transfer data between Hadoop and relational databases.
- Development SDKs.

Given the requirements, object-based stores have become the de facto choice for core data lake storage. Oracle Big Data Service is an automated service based on Cloudera Enterprise that provides a cost-effective Hadoop data lake environment: a secure place to store and analyze data of different types from any source. 10x compression of existing data saves storage cost. This is a problem faced in many Hadoop- or Hive-based big data analytics projects.

Data Lake Storage Gen1 paths are addressed with URIs of the form `adl://<account>.azuredatalakestore.net/<path>`. In summary, a data lake is a storage repository that can store large amounts of structured, semi-structured, and unstructured data. To enable Data Lake Storage Gen2, click Enabled next to Hierarchical namespace under Data Lake Storage Gen2. Data Lake Storage Gen2 combines the capabilities of Azure Blob storage and Azure Data Lake Storage Gen1. For offline transfer, Data Box, Data Box Disk, and Data Box Heavy devices help users move huge volumes of data to Azure.
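
As a small illustration of the addressing schemes involved, the helpers below build a Data Lake Storage Gen1 `adl://` URI and the `abfs://`/`abfss://` form used by the ABFS driver for Data Lake Storage Gen2 (`<container>@<account>.dfs.core.windows.net`). Account, container, and path names are hypothetical; the helpers only format strings and do not check that the resources exist.

```python
def adl_uri(account: str, path: str) -> str:
    """Data Lake Storage Gen1: adl://<account>.azuredatalakestore.net/<path>."""
    return f"adl://{account}.azuredatalakestore.net/{path.lstrip('/')}"


def abfs_uri(container: str, account: str, path: str, secure: bool = True) -> str:
    """Data Lake Storage Gen2 via the ABFS driver:
    abfs[s]://<container>@<account>.dfs.core.windows.net/<path>.
    abfss is the TLS-encrypted variant.
    """
    scheme = "abfss" if secure else "abfs"
    return f"{scheme}://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


if __name__ == "__main__":
    print(adl_uri("mylake", "/clusters/raw/weblogs"))
    print(abfs_uri("raw", "mylake", "weblogs/2023"))
```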

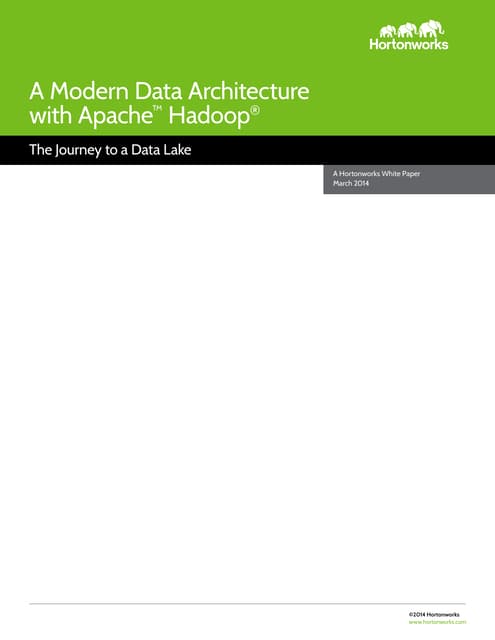

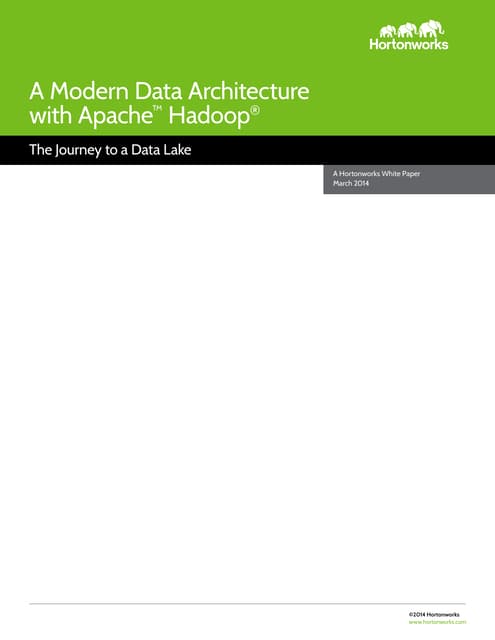

First, you will need to install Docker. Azure Data Lake Storage: the dark blue shading represents new features introduced with ADLS Gen2. A data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. The data lake is a data-centered architecture featuring a repository capable of storing vast quantities of data in various formats. The data lake concept is closely tied to Apache Hadoop and its ecosystem of open-source projects. In this section, you create an HDInsight Hadoop Linux cluster with Data Lake Storage Gen1 as the default storage. A data lake is a flat architecture that holds large amounts of raw data. Azure Data Lake includes all the capabilities required to make it easy for developers, data scientists, and analysts to store data of any size, shape, and speed, and do all types of processing and analytics across platforms and languages. Hadoop uses a cluster of distributed servers for data storage. This column is the data type that you use in the CREATE HADOOP TABLE table definition statement. Oracle Big Data Service provides a Hadoop stack that includes Apache Ambari, Apache Hadoop, Apache HBase, Apache Hive, Apache Spark, and other services for working with and securing big data.
A Hadoop data lake is a data management platform comprising one or more Hadoop clusters, used principally to process and store non-relational data such as log files, Internet clickstream records, sensor data, JSON objects, images, and social media posts. The Access ID setting (String) is the Application ID of the application in Azure Active Directory; it has no default (N/A), and an example value is 4464acc4-0c44-46ff-560c-65c41354405f. Create the data lake, then create the web application. A larger number of downstream users can then treat lakeshore marts as an authoritative source for their bounded context. Whether data is structured, unstructured, or semi-structured, it is loaded and stored as-is. Finally, we will explore our data in HDFS using Spark and create a simple visualization. Going back 8 years, I still remember the days when I was adopting big data frameworks like Hadoop and Spark. A data lake can store structured, semi-structured, or unstructured data, which means data can be kept in a more flexible format for future use. Use the Azure Blob Filesystem driver (ABFS) to connect to Azure Blob Storage and Azure Data Lake Storage Gen2 from Databricks. Fill in the Task name and Task description and select the appropriate task schedule. A data lake enables businesses to make data-driven decisions and gain insights.
The analytics layer comprises Azure Data Lake Analytics and HDInsight, a cloud-based analytics service. Data from web server logs, databases, social media, and third parties is ingested into the data lake. A data lake is a central location that holds a massive volume of data in its native, raw format and organizes large volumes of highly diverse data. In part four of this series, I want to talk about the confusion I am seeing in the market around the phrase "data lake", including how the term seems to be evolving within organizations based on my recent interactions. Today, data lakes are a major data source for analytics and machine learning. Pivotal HD offers a wide variety of data processing technologies for Hadoop: real-time, interactive, and batch. A data lake is an ideal environment for experimenting with different ideas and datasets. Databricks Delta Lake adds a database layer on top of a data lake; it can also be used for staging data from a data lake for BI and other tools. Hadoop scales horizontally and cost-effectively and performs long-running operations spanning big data sets.
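
For web server logs, the ETL step that turns unstructured lines into structured records can be as small as a regular expression. This sketch assumes the Apache Common Log Format (host, identity, user, timestamp, request, status, size); lines that don't parse are returned separately rather than dropped, so they can stay in the raw zone for inspection.

```python
import re
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

# Apache Common Log Format (assumed): host ident user [time] "method path protocol" status size
CLF_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" (?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_access_log(lines: Iterable[str]) -> Tuple[List[Dict[str, object]], List[str]]:
    """Turn raw log lines into structured records; return unparseable lines separately."""
    records: List[Dict[str, object]] = []
    rejects: List[str] = []
    for line in lines:
        match = CLF_PATTERN.match(line)
        if match is None:
            rejects.append(line)  # keep it for inspection instead of silently dropping it
            continue
        fields = match.groupdict()
        try:
            timestamp = datetime.strptime(fields["time"], "%d/%b/%Y:%H:%M:%S %z")
        except ValueError:
            rejects.append(line)  # right shape, but not a valid timestamp
            continue
        records.append({
            "host": fields["host"],
            "time": timestamp,
            "method": fields["method"],
            "path": fields["path"],
            "status": int(fields["status"]),
            "bytes": 0 if fields["size"] == "-" else int(fields["size"]),  # "-" means no body
        })
    return records, rejects


if __name__ == "__main__":
    sample = [
        '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326',
        "not a log line",
    ]
    parsed, bad = parse_access_log(sample)
    print(parsed[0]["path"], parsed[0]["status"], len(bad))
```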

Cloud-based data lake implementation helps the business make cost-effective decisions and supports IT-driven business processes. Identify data sources. A data lake is an architecture that allows you to store massive amounts of data in a central location. Yet, when trying to establish a modern data architecture, organizations are vastly unprepared for how to house, analyze, and manipulate the …

List the data from the Hadoop shell using s3a://. If this works, you have successfully integrated MinIO with Hadoop using s3a://.
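
A sketch of that s3a:// check against MinIO, assuming the hadoop-aws JAR (and its AWS SDK dependency) is on the classpath. It builds a `hadoop fs -ls` command that passes the S3A settings as generic `-D` options; the endpoint, keys, and bucket name are hypothetical. `fs.s3a.path.style.access` is enabled because MinIO is commonly addressed by path rather than by virtual-host bucket names, and `fs.s3a.impl` may already be the default in your Hadoop version. Secrets passed on the command line can show up in process listings, so for anything beyond local experiments put these properties in core-site.xml instead.

```python
import shlex
from typing import Dict, List


def s3a_minio_props(endpoint: str, access_key: str, secret_key: str) -> Dict[str, str]:
    """S3A connector settings for an S3-compatible MinIO server (values are placeholders)."""
    props = {
        "fs.s3a.endpoint": endpoint,
        "fs.s3a.access.key": access_key,
        "fs.s3a.secret.key": secret_key,
        # MinIO is commonly addressed as http://host:port/bucket rather than bucket.host
        "fs.s3a.path.style.access": "true",
        # Often already the default; set explicitly here for clarity
        "fs.s3a.impl": "org.apache.hadoop.fs.s3a.S3AFileSystem",
    }
    if endpoint.startswith("http://"):
        props["fs.s3a.connection.ssl.enabled"] = "false"  # plain-HTTP local endpoint
    return props


def hadoop_ls_s3a(bucket: str, props: Dict[str, str]) -> List[str]:
    """Build argv for `hadoop fs -D k=v ... -ls s3a://<bucket>/` (generic options come first)."""
    argv = ["hadoop", "fs"]
    for key, value in props.items():
        argv += ["-D", f"{key}={value}"]
    return argv + ["-ls", f"s3a://{bucket}/"]


if __name__ == "__main__":
    props = s3a_minio_props("http://localhost:9000", "MINIO_ACCESS_KEY", "MINIO_SECRET_KEY")
    # Print the command rather than running it; pass the list to subprocess.run to execute.
    print(shlex.join(hadoop_ls_s3a("datalake", props)))
```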

Coming from a database background, I found this adaptation challenging for many reasons. Remember the name you create here: that is what you will add to your ADL account as an authorized user. Best of all, with Qlik Replicate, data architects can create and execute big data migration flows without any manual coding, sharply reducing reliance on developers and boosting the agility of your data lake analytics program.

Lakeshore: create a number of data marts, each with a specific model for a single bounded context. Maintaining data lakes is essential as well. Since the core data lake enables your organization to scale, it's necessary to have a single repository of … Select a key duration and click Save. Hadoop is an important element of the architecture used to build data lakes. It was created to address the storage problems that many Hadoop users were having with HDFS. Add EMC Isilon scale-out NAS as integrated data storage to Pivotal HD and you have a shared data repository with multi-protocol support, including HDFS, to serve a wide variety of data processing requests.
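
The lakeshore pattern can be sketched in a few lines of Python: each bounded context declares which lake records belong to it and how to project them into its own model. The record fields, context names, and predicates below are hypothetical; a real implementation would typically run as a Spark or Hive job over the lake rather than over an in-memory list.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List

Record = Dict[str, object]


@dataclass(frozen=True)
class MartSpec:
    """One bounded context: which lake records belong to it, and its own model for them."""
    belongs: Callable[[Record], bool]
    project: Callable[[Record], Record]


def build_marts(lake: Iterable[Record], specs: Dict[str, MartSpec]) -> Dict[str, List[Record]]:
    """Derive one data mart per bounded context from the shared lake records."""
    marts: Dict[str, List[Record]] = {name: [] for name in specs}
    for record in lake:
        for name, spec in specs.items():
            if spec.belongs(record):
                marts[name].append(spec.project(record))
    return marts


# Hypothetical lake contents: raw events of different kinds side by side.
LAKE: List[Record] = [
    {"type": "order", "id": 1, "customer": "alice", "amount": 30.0, "ip": "10.0.0.1"},
    {"type": "click", "id": 2, "customer": "alice", "page": "/home", "ip": "10.0.0.1"},
    {"type": "order", "id": 3, "customer": "bob", "amount": 12.5, "ip": "10.0.0.2"},
]

# Hypothetical contexts: sales sees orders, web analytics sees clicks; neither keeps the IP.
SPECS: Dict[str, MartSpec] = {
    "sales": MartSpec(
        belongs=lambda r: r["type"] == "order",
        project=lambda r: {"order_id": r["id"], "customer": r["customer"], "amount": r["amount"]},
    ),
    "web": MartSpec(
        belongs=lambda r: r["type"] == "click",
        project=lambda r: {"customer": r["customer"], "page": r["page"]},
    ),
}

if __name__ == "__main__":
    for name, rows in build_marts(LAKE, SPECS).items():
        print(name, rows)
```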

It is very useful for time-to-market analytics solutions. (2) Hierarchical Namespace.
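
A toy model shows why a hierarchical namespace matters. In a flat object namespace, a "directory" is only a shared key prefix, so renaming it means rewriting every object under that prefix; with a hierarchical namespace, as in Data Lake Storage Gen2, a directory is a real node and a rename is a single metadata operation. The two classes below are illustrative in-memory stand-ins, not Azure's implementation; `ops` simply counts the operations each rename performs.

```python
from typing import Dict, List, Union

# A toy tree node: either file contents or a directory mapping names to child nodes.
Node = Union[bytes, Dict[str, "Node"]]


class FlatStore:
    """Flat namespace: a 'directory' is just a shared key prefix."""

    def __init__(self) -> None:
        self.objects: Dict[str, bytes] = {}
        self.ops = 0  # per-object operations performed by renames

    def put(self, key: str, data: bytes) -> None:
        self.objects[key] = data

    def get(self, key: str) -> bytes:
        return self.objects[key]

    def rename_dir(self, src: str, dst: str) -> None:
        # Every object under the prefix is rewritten under a new key, one at a time.
        prefix = src.rstrip("/") + "/"
        for key in [k for k in self.objects if k.startswith(prefix)]:
            self.objects[dst.rstrip("/") + "/" + key[len(prefix):]] = self.objects.pop(key)
            self.ops += 1


class HierStore:
    """Hierarchical namespace: directories are real nodes in a tree."""

    def __init__(self) -> None:
        self.root: Dict[str, Node] = {}
        self.ops = 0

    def _walk(self, parts: List[str], create: bool = False) -> Dict[str, Node]:
        node = self.root
        for part in parts:
            if part not in node:
                if not create:
                    raise FileNotFoundError("/".join(parts))
                node[part] = {}
            child = node[part]
            if not isinstance(child, dict):
                raise NotADirectoryError(part)
            node = child
        return node

    def put(self, path: str, data: bytes) -> None:
        *dirs, name = path.split("/")
        self._walk(dirs, create=True)[name] = data

    def get(self, path: str) -> bytes:
        *dirs, name = path.split("/")
        value = self._walk(dirs)[name]
        if isinstance(value, dict):
            raise IsADirectoryError(path)
        return value

    def rename_dir(self, src: str, dst: str) -> None:
        # Detach the whole subtree and reattach it under the new name: one operation.
        *src_parent, src_name = src.split("/")
        *dst_parent, dst_name = dst.split("/")
        subtree = self._walk(src_parent).pop(src_name)
        self._walk(dst_parent, create=True)[dst_name] = subtree
        self.ops += 1


if __name__ == "__main__":
    flat, hier = FlatStore(), HierStore()
    for i in range(1000):
        flat.put(f"raw/2023/part-{i}", b"x")
        hier.put(f"raw/2023/part-{i}", b"x")
    flat.rename_dir("raw/2023", "curated/2023")
    hier.rename_dir("raw/2023", "curated/2023")
    print(flat.ops, hier.ops)  # 1000 1
```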

If data loading doesn't respect Hadoop's internal mechanisms, your data lake can very easily turn into a data swamp. Brainstorming sessions about the data lake often revolve around how to build one using the power of the Apache Hadoop ecosystem. Hadoop platforms start you with an HDFS file system, or an equivalent. So you would need an ETL pipeline that takes the unstructured data and converts it to structured data. A Hadoop data lake is one built on a platform made up of Hadoop clusters. Data quality processes are based on setting functions, rules, and rule sets that standardize the validation of data across data sets.
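
The idea of building data quality checks from functions, rules, and rule sets can be sketched as follows. The rule names, fields, and thresholds are hypothetical: small functions produce checks, each `Rule` names one check, and a rule set is a list of rules that can be applied unchanged to any data set with the same fields, which is what standardizes validation across data sets.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Record = Dict[str, object]
Check = Callable[[Record], bool]


@dataclass(frozen=True)
class Rule:
    """A named check applied to one record."""
    name: str
    check: Check


# Reusable functions from which rules are built (hypothetical examples).
def not_null(field: str) -> Check:
    return lambda record: record.get(field) is not None


def in_range(field: str, low: float, high: float) -> Check:
    def check(record: Record) -> bool:
        value = record.get(field)
        return isinstance(value, (int, float)) and low <= value <= high
    return check


def one_of(field: str, allowed: set) -> Check:
    return lambda record: record.get(field) in allowed


def validate(records: List[Record], rule_set: List[Rule]) -> Dict[str, List[int]]:
    """Apply a rule set to a data set; return, per rule, the indexes of failing records."""
    failures: Dict[str, List[int]] = {rule.name: [] for rule in rule_set}
    for index, record in enumerate(records):
        for rule in rule_set:
            if not rule.check(record):
                failures[rule.name].append(index)
    return failures


# A rule set can be reused unchanged on every data set that shares these fields.
ORDER_RULES: List[Rule] = [
    Rule("customer_present", not_null("customer")),
    Rule("quantity_1_to_1000", in_range("quantity", 1, 1000)),
    Rule("known_currency", one_of("currency", {"EUR", "USD"})),
]
```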

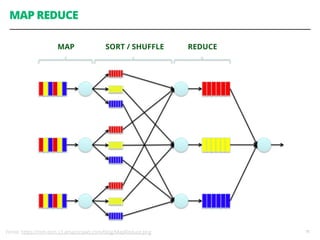

Big data architecture: Hadoop and Data Lake Requirements: List the requirements for new environments in OCI. Raw Data . hadoop Data Modeling in Hadoop - Hadoop Application Architectures [Book] Chapter 1. Heres a Hadoop is an High Distributed File Systems (HDFS) and it distributes data in its nodes for both storage and elaboration. Data Lake It stores files in HDFS (Hadoop distributed file system) however it doesnt qualify as a relational database. Relative databases store data in tables outlined by the precise schema. Hadoop will store unstructured, semi-structured and structured data whereas ancient databases will store solely structured data. Data Lake from SAP data hadoop lake emc splash makes bundle scale enables pivotal lakes announced update second storage Related Terminology Is Critical. Mix Disparate Data Sources. Another assumption about big data that has the potential for catastrophe, is that data scientists must work in Hadoop, the ubiquitous data processing framework. Qlik Replicate also can feed Kafka Hadoop flows for real-time big data streaming. You simply add more clusters as you need more space. from here). It is a nice environment to practice the Hadoop ecosystem components and Spark.  HDFS is also schema-less, which means it can support files of any type and format. Usually one would want to bring this type of data to prepare a Data Lake on Hadoop. Data Lake Layer . Apache Hadoop clusters also converge computing resources close to storage, facilitating faster processing of the large stored data sets. The implementation is part of the open source project chombo. Include hadoop-aws JAR in the classpath. Grant permissions to the app: Click on Permissions for the app, and then add Azure Data Lake and Windows Azure Service Management API permissions. What are the steps in developing a data lake in Hadoop Some data lake architecture providers use a Hadoop-based data management platform consisting of one or more Hadoop clusters. 
Data Lake & Hadoop : How can they power your Analytics? Azure Data Lake Storage Gen2 The phrase data lakes has become popular for describing the moving of data into Hadoop to create a repository for large quantities of structured and unstructured data in native formats.

HDFS is also schema-less, which means it can support files of any type and format. Usually one would want to bring this type of data to prepare a Data Lake on Hadoop. Data Lake Layer . Apache Hadoop clusters also converge computing resources close to storage, facilitating faster processing of the large stored data sets. The implementation is part of the open source project chombo. Include hadoop-aws JAR in the classpath. Grant permissions to the app: Click on Permissions for the app, and then add Azure Data Lake and Windows Azure Service Management API permissions. What are the steps in developing a data lake in Hadoop Some data lake architecture providers use a Hadoop-based data management platform consisting of one or more Hadoop clusters. Data Lake & Hadoop : How can they power your Analytics? Azure Data Lake Storage Gen2 The phrase data lakes has become popular for describing the moving of data into Hadoop to create a repository for large quantities of structured and unstructured data in native formats.  If Hadoop-based data lakes are to succeed, you'll need to ingest and retain raw data in a landing zone with enough metadata tagging to know what it is and where it's from. Once app is created, note down the Appplication ID of the app. There is a multi-step workflow to implement Data Lakes in OCI using Big Data Service. Delta Lake They e.g.

If Hadoop-based data lakes are to succeed, you'll need to ingest and retain raw data in a landing zone with enough metadata tagging to know what it is and where it's from. Once app is created, note down the Appplication ID of the app. There is a multi-step workflow to implement Data Lakes in OCI using Big Data Service. Delta Lake They e.g.  Upload using servicesAzure Data Factory. The Azure Data Factory service is a fully managed service for composing data: storage, processing, and movement services into streamlined, adaptable, and reliable data production pipelines.Apache Sqoop. Sqoop is a tool designed to transfer data between Hadoop and relational databases. Development SDKs Given the requirements, object-based stores have become the de facto choice for core data lake storage. Oracle Big Data Service is an automated service based on Cloudera Enterprise that provides a cost-effective Hadoop data lake environmenta secure place to store and analyze data of different types from any source. 10x compression of existing data and save storage cost. This is a problem faced in many Hadoop or Hive based Big Data analytic project. data hadoop lake informatica manage easily create connectivity cloud connector lakes c09 adl://

Upload using servicesAzure Data Factory. The Azure Data Factory service is a fully managed service for composing data: storage, processing, and movement services into streamlined, adaptable, and reliable data production pipelines.Apache Sqoop. Sqoop is a tool designed to transfer data between Hadoop and relational databases. Development SDKs Given the requirements, object-based stores have become the de facto choice for core data lake storage. Oracle Big Data Service is an automated service based on Cloudera Enterprise that provides a cost-effective Hadoop data lake environmenta secure place to store and analyze data of different types from any source. 10x compression of existing data and save storage cost. This is a problem faced in many Hadoop or Hive based Big Data analytic project. data hadoop lake informatica manage easily create connectivity cloud connector lakes c09 adl:// Data Lake Storage Gen2 combines the capabilities of Azure Blob storage and Azure Data Lake Storage Gen1. In this process, Data Box, Data Box Disk as well as Data Box Heavy devices help users transfer huge volumes of data to Azure offline. Try it now.

Data Lake Storage Gen2 combines the capabilities of Azure Blob storage and Azure Data Lake Storage Gen1. In this process, Data Box, Data Box Disk as well as Data Box Heavy devices help users transfer huge volumes of data to Azure offline. Try it now.  Data Lake First thing, you will need to install docker (e.g. Preparation. hadoop sql Azure Data Lake Storage: The dark blue shading represents new features introduced with ADLS Gen2. A data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale. The Data Lake is a data-centered architecture featuring a repository capable of storing vast quantities of data in various formats. The data lake concept is closely tied to Apache Hadoop and its ecosystem of open source projects. In this section, you create an HDInsight Hadoop Linux cluster with Data Lake Storage Gen1 as the default storage. A data lake is a flat architecture that holds large amounts of raw data. Hadoop Azure Data Lake Support I want to introduce our speakers today real quickly. How to Rock Data Quality Checks in the Data Lake - DZone allegrograph hadoop data lake overview analytics semantic Azure Data Lake includes all the capabilities required to make it easy for developers, data scientists, and analysts to store data of any size, shape, and speed, and do all types of processing and analytics across platforms and languages. Hadoop uses a cluster of distributed servers for data storage. tamr hadoop Grant permissions to the app: Click on Permissions for the app, and then add Azure Data Lake and Windows Azure Service Management API permissions. This column is the data type that you use in the CREATE HADOOP TABLE table definition statement. Oracle Big Data Service provides a Hadoop stack that includes Apache Ambari, Apache Hadoop, Apache HBase, Apache Hive, Apache Spark, and other services for working with and securing big data. 
A Hadoop data lake is a data management platform comprising one or more Hadoop clusters used principally to process and store non-relational data such as log files, Internet clickstream records, sensor data, JSON objects, images, and social media posts. Whether data is structured, unstructured, or semi-structured, it is loaded and stored as-is, which means data can be kept in a more flexible format for future use. The phrase "data lake" has become popular for describing the moving of data into Hadoop to create a repository for large quantities of structured and unstructured data in native formats. A data lake works as an enabler for businesses for data-driven decision-making and insights.

Access ID (type: String; default: N/A; example: 4464acc4-0c44-46ff-560c-65c41354405f): the Application ID of the application in Azure Active Directory. To set up the data lake solution, create the data lake and then create a web application. Once the app is created, note down its Application ID. For the scheduled task, fill in the Task name and Task description and select the appropriate task schedule.

Azure Data Lake Storage Gen2 can be used with Azure HDInsight clusters. From Databricks, use the Azure Blob Filesystem driver (ABFS) to connect to Azure Blob Storage and Azure Data Lake Storage Gen2. Finally, we will explore our data in HDFS using Spark and create a simple visualization. A larger number of downstream users can then treat these lakeshore marts as an authoritative source for that context.

The webinar "Migrating On-Premises Hadoop to a Cloud Data Lake with Databricks and AWS" was opened by Brian Dirking; its second speaker is Igor Alekseev, partner SA for data and analytics at AWS. Going back 8 years, I still remember the days when I was adopting big data frameworks like Hadoop and Spark.
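For the ABFS route, the Application ID noted above goes into per-account OAuth settings. The following Python sketch builds the Spark/Hadoop configuration keys commonly used for service-principal access to a Gen2 account; the storage account, IDs, and secret are hypothetical placeholders, and applying them assumes a Databricks (or other Spark) session where `spark.conf.set` is available.

```python
def abfs_oauth_conf(account: str, tenant_id: str, client_id: str, client_secret: str) -> dict[str, str]:
    """Per-account ABFS settings for OAuth client-credential (service principal) auth."""
    suffix = f"{account}.dfs.core.windows.net"
    return {
        f"fs.azure.account.auth.type.{suffix}": "OAuth",
        f"fs.azure.account.oauth.provider.type.{suffix}":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        f"fs.azure.account.oauth2.client.id.{suffix}": client_id,  # Application ID
        f"fs.azure.account.oauth2.client.secret.{suffix}": client_secret,
        f"fs.azure.account.oauth2.client.endpoint.{suffix}":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


if __name__ == "__main__":
    conf = abfs_oauth_conf("contosolake", "<tenant-id>", "<application-id>", "<client-secret>")
    for key, value in conf.items():
        # In a notebook you would call spark.conf.set(key, value) instead of printing.
        print(key, "=", value)
```

Suffixing every key with the account host scopes the credentials to that one storage account, so different accounts can use different service principals in the same session.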
The analytics layer comprises Azure Data Lake Analytics and HDInsight, which is a cloud-based analytics service. Data from web server logs, databases, social media, and third-party sources is ingested into the data lake. A data lake is a central location that handles a massive volume of data in its native, raw format and organizes large volumes of highly diverse data. Hadoop scales horizontally and cost-effectively, and performs long-running operations spanning big data sets.

In part four of this series I want to talk about the confusion in the market I am seeing around the data lake phrase, including a look at how the term seems to be evolving within organizations based on my recent interactions. Today, data lakes are providing a major data source for analytics and machine learning.

Pivotal HD offers a wide variety of data processing technologies for Hadoop: real-time, interactive, and batch. A data lake is an ideal environment for experimenting with different ideas and/or datasets. A Databricks Delta Lake database on top of a data lake can also be used for staging data from the lake to be used by BI and other tools.
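Because data lands in its native, raw format, structure is applied when the data is read rather than when it is written (often called schema-on-read). A minimal Python sketch, assuming hypothetical clickstream records that arrived partly as JSON and partly as CSV:

```python
import csv
import json

# Raw zone: records kept exactly as they arrived (hypothetical sample data).
RAW_RECORDS = [
    '{"user": "alice", "event": "click", "ms": 120}',
    "bob,view,340",
    '{"user": "carol", "event": "view"}',
]


def read_events(raw_lines):
    """Apply the (user, event, ms) schema at read time; the stored lines are never rewritten."""
    for line in raw_lines:
        if line.lstrip().startswith("{"):
            rec = json.loads(line)
            # Missing fields become None instead of failing the load.
            yield {"user": rec.get("user"), "event": rec.get("event"), "ms": rec.get("ms")}
        else:
            user, event, ms = next(csv.reader([line]))
            yield {"user": user, "event": event, "ms": int(ms)}


if __name__ == "__main__":
    for row in read_events(RAW_RECORDS):
        print(row)
```

A different consumer could read the same raw lines with a different schema, which is the flexibility the as-is storage model is meant to preserve.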

A cloud-based data lake implementation helps the business make cost-effective decisions and supports IT-driven business processes. One step is to identify data sources. A data lake is an architecture that allows you to store massive amounts of data in a central location. Yet, when trying to establish a modern data architecture, organizations are vastly unprepared for how to house, analyze, and manipulate the


To verify the setup, list the data from the Hadoop shell using s3a://. If this works, you have successfully integrated MinIO with Hadoop using s3a://.
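The S3A connector reads its MinIO connection details from standard `fs.s3a.*` properties. The Python sketch below assembles them and builds the listing command as a `hadoop fs` invocation with generic `-D` options. The endpoint (MinIO's default port 9000 on localhost), bucket name, and keys are hypothetical, and in practice the keys belong in `core-site.xml` or a credential store rather than on the command line.

```python
import shlex


def s3a_minio_props(endpoint: str, access_key: str, secret_key: str) -> dict[str, str]:
    """fs.s3a.* settings for an S3-compatible MinIO server."""
    return {
        "fs.s3a.endpoint": endpoint,
        "fs.s3a.access.key": access_key,
        "fs.s3a.secret.key": secret_key,
        # Path-style URLs (http://host:port/bucket) are the usual choice for MinIO.
        "fs.s3a.path.style.access": "true",
        # Enable TLS only when the endpoint is https.
        "fs.s3a.connection.ssl.enabled": str(endpoint.startswith("https://")).lower(),
    }


def list_bucket_cmd(bucket: str, props: dict[str, str]) -> list[str]:
    """Build `hadoop fs -D k=v ... -ls s3a://<bucket>/` as an argument list."""
    cmd = ["hadoop", "fs"]
    for key, value in props.items():
        cmd += ["-D", f"{key}={value}"]
    return cmd + ["-ls", f"s3a://{bucket}/"]


if __name__ == "__main__":
    props = s3a_minio_props("http://localhost:9000", "<access-key>", "<secret-key>")
    print(shlex.join(list_bucket_cmd("datalake", props)))
```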

Coming from a database background, this adaptation was challenging for many reasons. Remember the name you create here: that is what you will add to your ADL account as an authorized user. With Qlik Replicate, data architects can create and execute big data migration flows without manual coding, sharply reducing reliance on developers and boosting the agility of a data lake analytics program.

Lakeshore: create a number of data marts, each of which has a specific model for a single bounded context. Maintaining the data lake is essential as well. Hadoop is an important element of the architecture used to build data lakes. Since the core data lake enables your organization to scale, it is necessary to have a single repository of

Select a key duration and click Save.

Shipping data offline. It was created to address the storage problems that many Hadoop users were having with HDFS. Add EMC Isilon scale-out NAS integrated data storage to Pivotal HD and you have a shared data repository with multi-protocol support, including HDFS, to service a wide variety of data processing requests.
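The lakeshore pattern can be sketched in a few lines of Python: each mart selects the raw records belonging to its bounded context and keeps only the fields of its own model. The record types, field names, and the two contexts (sales and support) are hypothetical.

```python
# Raw lake records (hypothetical), stored as-is with a type tag.
RAW = [
    {"type": "order", "order_id": 1, "customer": "acme", "amount": 250.0},
    {"type": "ticket", "ticket_id": 7, "customer": "acme", "agent": "dana"},
    {"type": "order", "order_id": 2, "customer": "globex", "amount": 99.5},
]

# One model per bounded context: (record type it owns, fields its model keeps).
MART_MODELS = {
    "sales": ("order", ["order_id", "customer", "amount"]),
    "support": ("ticket", ["ticket_id", "customer", "agent"]),
}


def build_lakeshore_marts(raw, models):
    """Project raw records into one mart per bounded context."""
    marts = {name: [] for name in models}
    for name, (record_type, fields) in models.items():
        for rec in raw:
            if rec["type"] == record_type:
                marts[name].append({field: rec[field] for field in fields})
    return marts


if __name__ == "__main__":
    for name, rows in build_lakeshore_marts(RAW, MART_MODELS).items():
        print(name, rows)
```

The raw records stay untouched in the lake; each mart is a derived, context-specific view that downstream users can treat as authoritative for that context.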

It is very useful for time-to-market analytics solutions. (2) Hierarchical namespace.
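One practical difference a hierarchical namespace makes is directory rename. The following is a deliberately simplified Python model, not how any storage service is implemented: in a flat namespace a "directory" is only a key prefix, so renaming it touches every object under the prefix (copy plus delete), while in a hierarchical namespace the directory is a real node and the rename is a single metadata operation. Paths and names are hypothetical.

```python
def rename_flat(store: dict, src: str, dst: str) -> int:
    """Flat namespace: copy and delete every object whose key starts with `src`."""
    ops = 0
    for key in [k for k in store if k.startswith(src)]:  # snapshot keys before mutating
        store[dst + key[len(src):]] = store[key]  # copy
        del store[key]  # delete
        ops += 2
    return ops


class Directory:
    """Hierarchical namespace node: children are sub-directories or file contents."""

    def __init__(self) -> None:
        self.children: dict = {}


def rename_hns(parent: Directory, old: str, new: str) -> int:
    """Hierarchical namespace: re-link one directory entry, whatever its size."""
    parent.children[new] = parent.children.pop(old)
    return 1


if __name__ == "__main__":
    flat = {"raw/2023/a.json": b"", "raw/2023/b.json": b"", "raw/2023/c.json": b""}
    print(rename_flat(flat, "raw/2023/", "curated/2023/"))  # 6 operations for 3 objects

    root = Directory()
    root.children["2023"] = Directory()
    print(rename_hns(root, "2023", "2023-archived"))  # 1 operation
```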

If data loading does not respect Hadoop's internal mechanisms, your data lake can very easily turn into a data swamp. All brainstorming sessions about the data lake often revolve around how to build one using the power of the Apache Hadoop ecosystem. Hadoop platforms start you with an HDFS file system, or equivalent, so you would need some ETL pipeline that takes the unstructured data and converts it to structured data. A Hadoop data lake is one that has been built on a platform made up of Hadoop clusters.

Data quality processes are based on setting functions, rules, and rule sets that standardize the validation of data across data sets.
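The functions, rules, and rule sets structure can be sketched in Python as follows. This is an illustrative model only, not the API of any particular data quality product; the check names, fields, and thresholds are hypothetical. Functions are reusable predicates, a rule binds a function to a field, and a rule set groups the rules applied to one data set so every load is validated the same way.

```python
from typing import Callable

Check = Callable[[object], bool]
Rule = tuple[str, str, Check]  # (rule name, field, check function)


# Functions: reusable predicates shared by many rules.
def not_null(value: object) -> bool:
    return value is not None


def in_range(low: float, high: float) -> Check:
    return lambda value: value is not None and low <= value <= high


# Rule set: all rules applied to one (hypothetical) orders data set.
ORDER_RULES: list[Rule] = [
    ("order_id_present", "order_id", not_null),
    ("amount_in_range", "amount", in_range(0, 10_000)),
]


def validate(records: list[dict], rule_set: list[Rule]) -> list[tuple[int, str]]:
    """Return (record index, rule name) for every rule a record fails."""
    failures = []
    for index, record in enumerate(records):
        for name, field, check in rule_set:
            if not check(record.get(field)):
                failures.append((index, name))
    return failures


if __name__ == "__main__":
    orders = [{"order_id": 1, "amount": 50}, {"order_id": None, "amount": 20_000}]
    print(validate(orders, ORDER_RULES))  # [(1, 'order_id_present'), (1, 'amount_in_range')]
```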
